Are you at risk of an AI romance scam this Valentine’s Day?

Deepfakes and AI chatbots are transforming romance scams into an industrial-scale threat that extracts millions from Australian victims each year

With Valentine’s Day around the corner, many people turn to dating apps and social media in search of connection, companionship and perhaps that perfect romantic relationship. But lurking behind profile pictures and persuasive messages is a darker reality that transforms the season of hearts and flowers into a hunting ground for sophisticated criminals.

Romance scammers are no longer working with stolen photographs and clumsy scripts. They’re deploying artificial intelligence that operates around the clock, generating deepfake videos that smile on command while chatbots maintain dozens of personalised conversations simultaneously – each one calibrated to exploit the specific vulnerabilities of lonely hearts.

The timing is deliberate. Romance scam activity spikes around Valentine’s Day as scammers capitalise on the emotional vulnerabilities of people seeking their perfect partner. What makes 2026 different from previous years is the technological sophistication now available to even amateur fraudsters, creating what security researchers describe as an industrial-scale threat that extracts millions of dollars from Australians every year and more than $US600 million from US citizens. Individual Australians who fall victim to such scams lose almost $12,000 on average.

How scammers are using AI to power romance scams

What’s changed in recent times is not the fundamental con of a romance scam, but the technology powering it, according to Dr Lesley Land, a Senior Lecturer in the School of Information Systems and Technology Management at UNSW Business School.

“Deepfakes enable romance fraud by easing the ability of perpetrators to generate fraudulent profiles quickly,” said Dr Land, whose research interests include cybercrimes, identity frauds/misuses, romance scams and investment scams.

“Additionally, agentic AI is revolutionising romance fraud by enabling scammers to move beyond simple phishing to creating highly sophisticated, automated, and emotionally manipulative, long-term deceptions.

“Traditional methods relied on persuasion techniques by manipulating characteristics such as credibility, reciprocity, authority and scarcity on profile images and descriptions. Once initiated, these scams require constant human input for continuous grooming.”

Dr Land said modernised approaches using agentic AI can independently plan, act, and learn to maintain fake relationships, build trust, and eventually persuade victims to part with their money. “Multiple techniques (including AI), when combined, increase the capacity of creating fraudulent dating profiles that enhance hyper-personal communications between daters,” she said.

Dr Land noted that the quality of deepfakes varies widely, from highly realistic to low-quality. “Therefore, we cannot guarantee that these technologies have reached a point of trusted authenticity that cannot be detected most of the time,” she said.

The stakes are significant for those seeking romantic connections online. Cybersecurity firm Norton has found that two in five online daters have already been targeted by dating scams, while credit reporting agency Experian says AI-enabled romance scams are so profitable that they rank among the top five fraud trends.

When seeing is no longer believing: The deepfake authenticity crisis

The subconscious alarm bells that once protected consumers from digital fraud are being disarmed through deepfake technologies, with AI-generated media bypassing our natural scepticism.

When an 82-year-old retiree sees what appears to be Elon Musk promoting an investment opportunity in a video, or when a potential romance scam victim receives a personalised video message from someone greeting them by name, the traditional heuristic of “seeing is believing” becomes dangerously obsolete.

For example, researchers from the University of California, Berkeley, recently examined a 15-second video used in a romance scam. Even for experts in deepfake detection, determining authenticity proved challenging. Research colleagues eventually traced the clip to a Russian ship engineer whose voice and mouth movements had been synthesised to speak fluent English. The victim believed she was receiving an intimate morning greeting from a potential romantic partner.

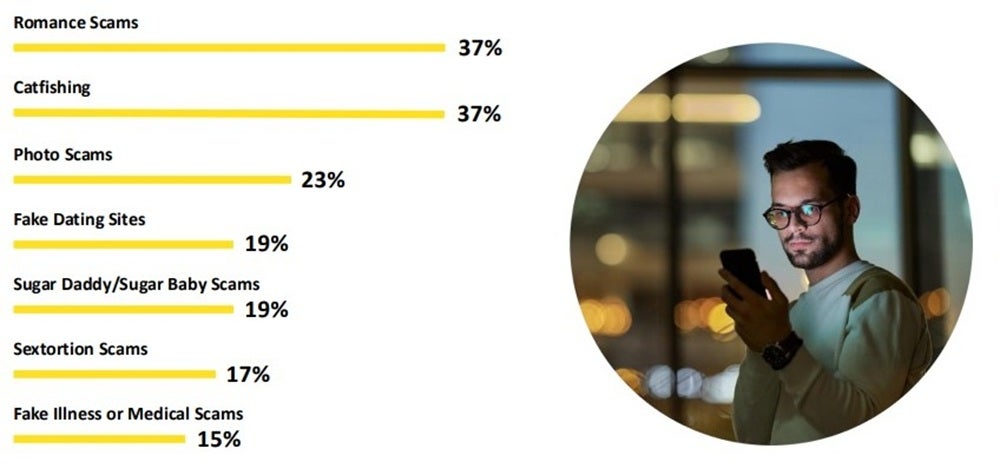

The most common types of dating scams

“The grooming phase of romance fraud is the most deceptive phase as it often exploits psychological manipulations over extended timeframes,” Dr Land said. “Hence, it is possible that realistic deepfakes can potentially forge deeper forms more quickly and can make it harder for potential victims to be sceptical and suspicious of potential romance frauds.”

Furthermore, victims who survive romance scams often report experiencing severe psychological impacts, including post-traumatic stress disorder, depression and suicidal ideation. The shame and embarrassment surrounding victimisation often prevent reporting, meaning official statistics significantly underestimate the true scale of harm.

The automation advantage: Scaling intimacy through AI

The primary danger of AI-enabled romance scams lies less in visual perfection and more in the ability to automate thousands of simultaneous, highly personalised conversations. Traditional romance scammers needed extensive interpersonal skills and time to develop fraudulent relationships.

Modern operations use large language models (LLMs) to generate emotionally resonant content tailored to each victim’s interests, communication style and psychological vulnerabilities.

These AI chatbots provide 24-hour companionship, responding within seconds with messages that adjust their tone and topic based on the victim's responses. Research from multiple universities studying romance scam psychology reveals that scammers exploit specific vulnerabilities: loneliness, impulsivity, trust in authority figures and the psychological need for consistency with previous commitments. AI accelerates this manipulation by analysing social media profiles to compile detailed dossiers on targets, identifying emotional vulnerabilities like recent divorce, bereavement or isolation.

“When traditional persuasion techniques are combined with emerging advanced deepfake AI technologies that can invoke more alluring scams,” Dr Land explained. “We need to be cognisant that advanced AI can produce many different potential capabilities (for example, high levels of personalisation, creativity, impersonations through images, videos and conversations) through multiple mediums of communication.”

Dr Land believes experimental research is needed to test which mediums and/or forms of communication generated by deepfakes are more deceptive to our human sensory systems (such as seeing and hearing).

Starving the machine: Managing your digital footprint

Can people effectively starve AI models of training data by locking down biometric footprints on social media? The answer is nuanced. While complete digital invisibility remains impractical for most people maintaining personal and professional networks, strategically reducing publicly accessible information creates meaningful barriers for scammers.

Romance scammers use open-source intelligence-gathering techniques, scraping dating platforms, social media profiles, and public forums to harvest photographs, voice samples, and personal details. These data points feed AI systems that generate convincing synthetic media.

“While machine learning is required to train AI models, an important consideration is whether there is a way to curb machine learning training for attaining deceptive outcomes, particularly when sensitive and confidential personal data (such as biometric data) is involved,” said Dr Land.

“Mitigation of all scams requires awareness and education at personal, societal and governmental levels,” said Dr Land, who explained that sharing of scam methods and cases is of paramount importance at societal, national and international levels.

Regulations across jurisdictions must also evolve to keep pace with changing scam methods, and Dr Land called for stronger collaboration among communities, authorities, and international entities to address this growing problem worldwide.

5-question quiz: Is your online romance real or AI-generated?

1. The 24/7 conversation partner. Your new online connection provides highly sophisticated, emotionally resonant responses at any hour. They never seem tired, distracted, or forgetful about details you've shared. Their "active listening" feels almost superhuman. Should you be reassured by their attentiveness?

The reality: You may be interacting with an LLM configured for relationship-building. A Stanford University study of scam operation insiders found that 100% of interviewed scammers reported daily use of ChatGPT in their operations. In controlled experiments, LLM-powered scam agents elicited significantly greater trust from participants than human operators and achieved higher compliance rates (46% vs 18% for humans). The study documented that 87% of scam labour consists of "systematised conversational tasks readily susceptible to automation".

2. The perfect mirror. Your new interest remarkably shares every hobby, political view, and travel preference you've ever mentioned publicly. They even reference photos or posts from years ago. Is this your potential soulmate, or something else?

The reality: This indicates "algorithmic affinity manipulation". AI tools can scrape and analyse years of public social media data within seconds to construct a "perfect match" persona. The Stanford study documented this as routine practice: scammers use LLMs for "context-aware reply generation" by feeding entire chat histories and scraped social media data into the system. This manufactured compatibility is designed to lower cognitive defences and accelerate emotional attachment.

3. Video call inconsistencies. During a video call, you notice slight blurring around their mouth when speaking, facial "resetting" during head turns, or unnatural hand movements across their face. Are these red flags, or internet connection issues?

The reality: These may be deepfake rendering artifacts, although deepfake technology improved significantly during 2024 and 2025. A benchmark study found that commercial deepfake detectors achieve only ~78% accuracy on real-world synthetic media, with academic models performing substantially worse. The study noted that detectors "particularly struggle with artifacts from recent diffusion-based synthesis" methods. Human detection rates for high-quality video deepfakes average just 24.5%. However, simple artifact detection (blurred mouths, hand-wave tests) is increasingly unreliable as generative AI technology evolves faster than detection methods.

4. The voice message test. You receive an urgent voice note. The voice has the exact accent, tone, and emotional inflections you expect. Does this prove authenticity?

The reality: Not necessarily. Modern voice cloning systems achieve remarkably high fidelity with minimal audio samples. Microsoft's VALL-E 2 demonstrated "human parity" in voice synthesis using just 3 seconds of reference audio. Research has confirmed that current voice cloning technology requires as little as 3-10 seconds of high-quality audio for basic quality cloning, with professional results achievable from 30 seconds of audio. While 3 seconds is enough for basic cloning, quality varies significantly depending on the audio source, background noise, and the specific technology used.

5. The reverse-image dead end. Reverse-image searches on their profile pictures return zero results – no social media, no old photos, nothing. They claim to be "just private." What does the research say?

The reality: The photos may have been created by a generative adversarial network (GAN), meaning they depict people who do not exist. StyleGAN and similar technologies create photorealistic synthetic faces that will never appear in reverse-image searches because the AI synthesised a novel face from scratch. Research on GAN-generated faces shows detection systems can achieve >90% accuracy under controlled conditions, but human detection rates are poor – studies show people struggle to identify even older GAN faces, with performance not improving when participants are told synthetic faces are present.